Jie Shao (1,2), Wuming Zhang (3,4), Nicolas Mellado (2), Nan Wang (5), Shuangna Jin (1), Shangshu Cai (1), Lei Luo (6), Thibault Lejemble (2), Guangjian Yan (1).

(1) State Key Laboratory of Remote Sensing Science, Beijing Normal University, Beijing, China

(2) IRIT, CNRS, University of Toulouse, France

(3) School of Geospatial Engineering and Science, Sun Yat-Sen University, China

(4) Southern Marine Science and Engineering Guangdong Laboratory, China

(5) School of Remote Sensing and Information Engineering, Wuhan University, China

(6) Key Laboratory of Digital Earth Science, Chinese Academy of Sciences, China

ISPRS Journal of Photogrammetry and Remote Sensing, Vol. 163, May 2020

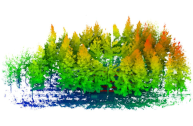

Precise structural information collected from forest plots is important for managing forest resources and for making decisions about them. Laser scanning is currently widely used in forest inventories to acquire three-dimensional (3D) structural information. Ground-based forest measurements use three main data-acquisition modes: single-scan terrestrial laser scanning (TLS), multi-scan TLS and multi-single-scan TLS. However, each of these modes poses specific difficulties for forest measurements. Because of occlusion, the single-scan TLS mode captures only one side of each tree. The multi-scan TLS mode overcomes occlusion problems, but at the cost of longer acquisition times, more human labor and more effort in data preprocessing. The multi-single-scan TLS mode reduces the workload and occlusion effects but does not provide a complete 3D reconstruction of the forest. Mobile laser scanning (MLS) largely avoids these problems; however, the geometric peculiarities of forests (e.g., similar tree shapes and placements, and occlusion) complicate motion estimation and reduce mapping accuracy.

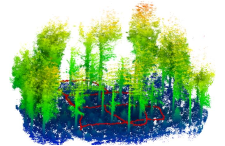

Therefore, this paper proposes a novel method that combines single-scan TLS and MLS for 3D forest data acquisition. We use single-scan TLS data as a reference onto which we register MLS point clouds, so that the MLS data fill in what the single scan misses. To register the MLS point clouds to the reference, we extract virtual feature points that sample the centerlines of tree stems and propose a new optimization-based registration framework. In contrast to previous MLS-based studies, the proposed method fully exploits the natural geometric characteristics of trees. We demonstrate the effectiveness, robustness, and accuracy of the proposed method on three datasets, from which we extract structural information. The experimental results show that the MLS data compensate for the tree stem data omitted by a single scan, and that the field measurement takes much less time than in the multi-scan TLS mode. In addition, single-scan TLS data provide strong global constraints for MLS-based forest mapping, which keeps mapping errors low, e.g., mean errors below 2.0 cm in both the horizontal and vertical directions.
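The abstract does not spell out how the virtual feature points are computed or how the optimization-based registration is formulated. As an illustration only, the sketch below shows two generic building blocks that such a pipeline could rely on. It is not the authors' framework. A virtual feature point on a stem centerline is estimated as the center of a circle fitted to a thin horizontal slice of stem points, using the Kasa algebraic least-squares circle fit. MLS feature points are then aligned to TLS feature points with the Kabsch/SVD closed-form rigid transform. Both techniques are substitutes chosen for this sketch. The function names `fit_circle_center` and `rigid_transform` are hypothetical, and the sketch assumes that point correspondences between the MLS and TLS feature points are already known. The data are synthetic.

```python
import numpy as np


def fit_circle_center(xy):
    """Kasa algebraic least-squares circle fit (generic technique, not the paper's).

    Solves x^2 + y^2 + D*x + E*y + F = 0 for D, E, F; the circle center is
    (-D/2, -E/2) and the radius is sqrt(cx^2 + cy^2 - F).
    """
    xy = np.asarray(xy, dtype=float)
    x, y = xy[:, 0], xy[:, 1]
    A = np.column_stack([x, y, np.ones_like(x)])
    b = -(x**2 + y**2)
    (D, E, F), *_ = np.linalg.lstsq(A, b, rcond=None)
    center = np.array([-D / 2.0, -E / 2.0])
    radius = np.sqrt(center @ center - F)
    return center, radius


def rigid_transform(src, dst):
    """Kabsch/SVD: least-squares R, t such that dst_i ~= R @ src_i + t.

    Assumes src[i] and dst[i] are corresponding points (hypothetical matching).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)          # cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0  # avoid reflections
    S = np.diag([1.0] * (H.shape[0] - 1) + [d])
    R = Vt.T @ S @ U.T
    t = mu_d - R @ mu_s
    return R, t


# Synthetic stem slice: points on a circle of radius 0.15 m centered at (2.0, -1.0).
rng = np.random.default_rng(0)
theta = rng.uniform(0.0, 2.0 * np.pi, 50)
slice_xy = np.column_stack([2.0 + 0.15 * np.cos(theta), -1.0 + 0.15 * np.sin(theta)])
center, radius = fit_circle_center(slice_xy)

# Synthetic "TLS" feature points and an "MLS" copy expressed in another frame.
tls_pts = rng.uniform(-10.0, 10.0, size=(20, 3))
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([1.5, -0.5, 0.2])
mls_pts = (tls_pts - t_true) @ R_true          # inverse motion: TLS frame -> MLS frame

# Register MLS feature points onto the TLS reference.
R_est, t_est = rigid_transform(mls_pts, tls_pts)
registered = mls_pts @ R_est.T + t_est
```

With noise-free synthetic data, both steps recover the true values exactly. On real scans, slices would contain noise, branches and partial arcs, and correspondences would have to be estimated. A robust, iterative optimization such as the one the paper proposes is meant to handle exactly those conditions, which this closed-form sketch does not.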

BibTeX

@article{SHAO2020214,

title = {SLAM-aided forest plot mapping combining terrestrial and mobile laser scanning},

journal = {ISPRS Journal of Photogrammetry and Remote Sensing},

volume = {163},

pages = {214--230},

year = {2020},

issn = {0924-2716},

doi = {10.1016/j.isprsjprs.2020.03.008},

url = {https://www.sciencedirect.com/science/article/pii/S0924271620300782},

author = {Jie Shao and Wuming Zhang and Nicolas Mellado and Nan Wang and Shuangna Jin and Shangshu Cai and Lei Luo and Thibault Lejemble and Guangjian Yan},

keywords = {Forest mapping, LiDAR, SLAM, Single-scan TLS, MLS},

}