Automatic shape adjustment at joints for the implicit skinning

Olivier Hachette, Florian Canezin, Rodolphe Vaillant, Nicolas Mellado, Loïc Barthe.

CNRS, IRIT, Université de Toulouse, France.

Elsevier Computers & Graphics (Proc. of SMI 2021)

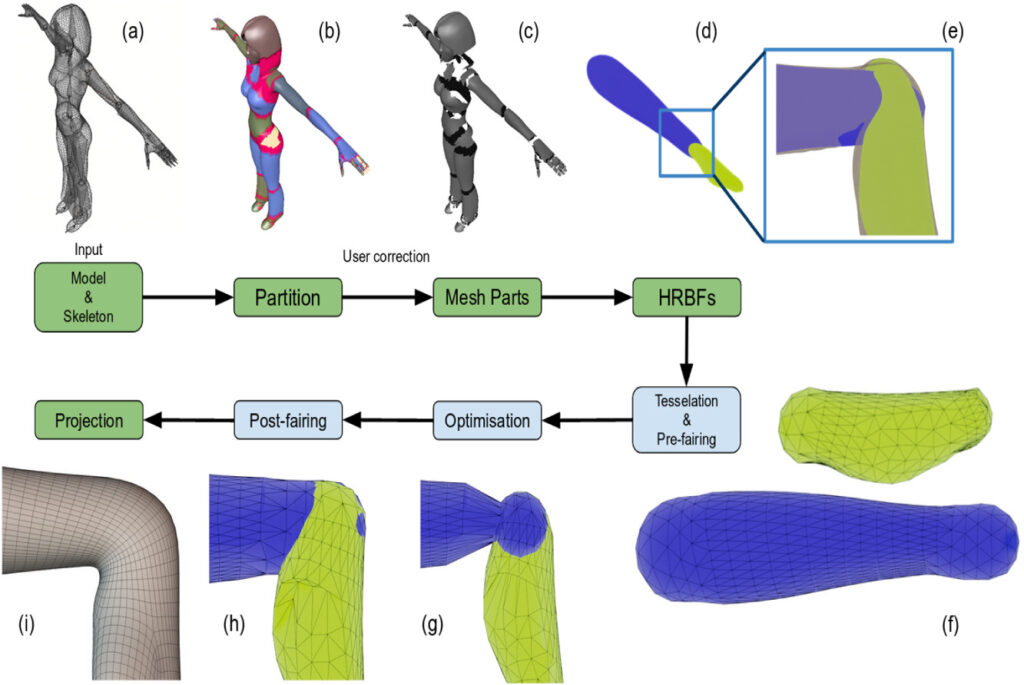

Implicit skinning is a geometric, interactive skinning method for skeleton-based animation that produces plausible deformations at joints while resolving skin self-collisions. Although it requires only a few user interactions to be adequately parameterized, some effort still has to be spent on editing the shapes at joints.

In this work, we introduce a dedicated optimization framework that automatically adjusts the shape of the surfaces generating the deformations at joints as they rotate during an animation. The approach fits directly into the implicit skinning pipeline and has no impact on the algorithm's performance during animation. Starting from the partition of the mesh representing the animated character, we propose a dedicated hole-filling algorithm based on a particle system and power crust meshing. We then introduce a procedure that optimizes the shape of the filled mesh as it rotates at the joint level. This automatically generates plausible skin deformations when joints are rotated, without the need for extra user editing.
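To give a rough intuition for the particle-system part of the hole filling, the sketch below spreads free particles inside an idealized hole by mutual repulsion. It is not the algorithm of the paper: the hole is assumed to be a planar unit circle, the repulsion kernel (linear falloff within a radius `reach`), the step size and all function names are hypothetical choices made for illustration, and the power crust meshing step is omitted entirely.

```python
import math
import random


def circle_boundary(n, radius=1.0):
    """Fixed samples on the rim of an idealized planar, circular hole (assumption)."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]


def min_pairwise_distance(points):
    """Smallest distance between any two distinct points of the list."""
    return min(math.hypot(p[0] - q[0], p[1] - q[1])
               for i, p in enumerate(points) for q in points[i + 1:])


def fill_hole_with_particles(boundary, n_interior, radius=1.0,
                             reach=0.5, step=0.02, iterations=300, seed=0):
    """Spread n_interior free particles inside the hole by pairwise repulsion.

    Boundary samples stay fixed and only act as repellers.
    """
    rng = random.Random(seed)
    # Seed all free particles in a tiny cluster around the hole centre.
    pts = [(rng.uniform(-0.01, 0.01), rng.uniform(-0.01, 0.01))
           for _ in range(n_interior)]
    for _ in range(iterations):
        moved = []
        for i, (x, y) in enumerate(pts):
            fx = fy = 0.0
            others = pts[:i] + pts[i + 1:] + boundary
            for qx, qy in others:
                dx, dy = x - qx, y - qy
                dist = math.hypot(dx, dy)
                # Short-range repulsion: strongest at contact, zero beyond `reach`.
                if 1e-12 < dist < reach:
                    w = (1.0 - dist / reach) / dist
                    fx += w * dx
                    fy += w * dy
            x, y = x + step * fx, y + step * fy
            # Keep the particle inside the hole by projecting it back onto the rim.
            r = math.hypot(x, y)
            if r > radius:
                x, y = x * radius / r, y * radius / r
            moved.append((x, y))
        pts = moved  # all particles are updated from the previous positions
    return pts


if __name__ == "__main__":
    rim = circle_boundary(24)
    samples = fill_hole_with_particles(rim, n_interior=20)
    print(f"closest pair after relaxation: {min_pairwise_distance(samples):.3f}")
```

In a full pipeline, the relaxed samples together with the rim samples would then be handed to a surface reconstruction step (power crust in the paper) to obtain the filled mesh.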

BibTeX

@article{HACHETTE2021,
title = {Automatic shape adjustment at joints for the implicit skinning},
journal = {Computers \& Graphics},
year = {2021},
issn = {0097-8493},
doi = {https://doi.org/10.1016/j.cag.2021.10.018},
url = {https://www.sciencedirect.com/science/article/pii/S0097849321002296},
author = {Olivier Hachette and Florian Canezin and Rodolphe Vaillant and Nicolas Mellado and Loïc Barthe},
keywords = {Shape deformation, Geometric modeling, Skinning},
}