Clinical relevance of speech intelligibility measures

Context

Intelligibility can be defined as an analytical notion of acoustic-phonetic decoding: it addresses “low-level” linguistic units and refers to the quality of pronunciation at the segmental (phoneme and syllable) level.

Speech intelligibility is usually assessed using perceptual tasks. While these are informative, they have several drawbacks: they rely on subjective ratings, which are biased by, among other factors, the rater’s familiarity with the subject’s speech and with the test stimuli. They are also usually time-consuming and are often limited to single-word stimuli, which are not representative of the speaker’s verbal communication in a natural context.

To address these drawbacks, accurate and reliable objective tools are required, and their measures have to be grounded in the most recent scientific research.

Overview

The aim of this project is to develop an objective speech intelligibility assessment tool for adults, based on the most recent data from the scientific literature as well as on the data provided by clinicians and speech experts.

There are several axes in this project, the first of which are “state of the art” studies aimed at gathering a wide range of relevant information in order to build a new speech assessment tool on solid foundations. These can be organized according to the three pillars of evidence-based practice (EBP):

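- Best research evidence from the scientific literature: a systematic review

- Clinical experience: an online survey of clinicians and interviews with speech experts

- Patient values: a questionnaire on the patients’ point of view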

The remaining, more practical axes focus on using the data gathered in the previous points to explore elements for a new speech assessment tool:

- Speech material: creating a new standardized reading passage for speech and voice assessment

- Speech banana: acoustic analysis to build a “consonant space”

- Spectral moments: describing non-sonorants using a statistical representation of the spectrum

Systematic review

Context: A systematic review was carried out to investigate papers analyzing the link between acoustic measures in healthy adult speakers and perceived speech intelligibility.

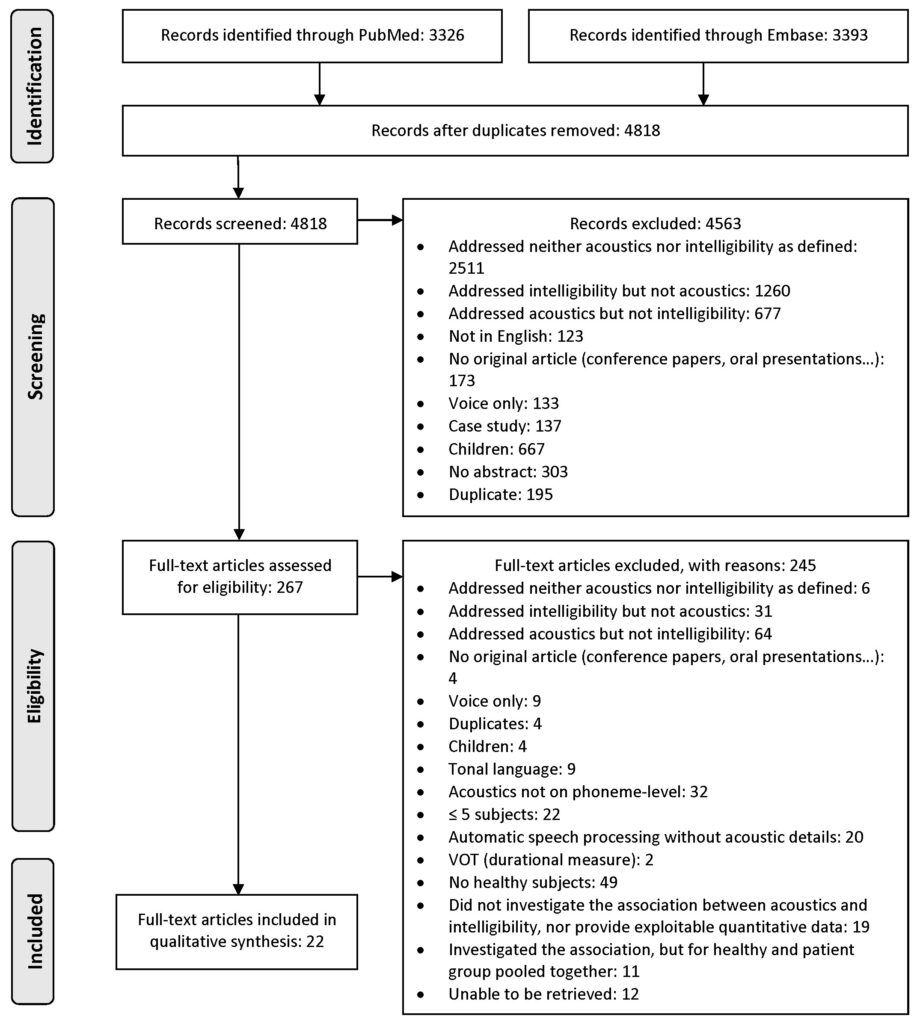

Materials and methods: This review was carried out according to the PRISMA guidelines. Two independent raters selected articles from the Embase and PubMed databases.

Results: Twenty-two studies were retained (see Fig. 1 for the selection process). Eighteen papers investigated vowel acoustics, one studied glides and eight articles investigated consonants, mostly sibilants. Various acoustic measures and intelligibility estimates were used. The following measures were shown to be linked to sub-lexical perceived speech intelligibility ratings: for vowels, steady-state F1 and F2 measures, the F1 range, the [i]-[u] F2 difference, F0-F1 and F1-F2 differences in [ɛ-ɑ] and [ɪ-ɛ], the vowel space area, the mean amount of formant movement, the vector length and the spectral change measure; for consonants, the centroid energy and the spectral peak in the [s]-sound, as well as the steady-state F1 offset frequency in vowels preceding [t] and [d].

Conclusion: Speech is “imperfect” and highly variable even in healthy adult speakers. Intelligibility is a complex notion that is defined in many different ways in the scientific literature, leading to very dissimilar analysis methods according to the background and the aims of the authors. Our results highlight the need to first have a better understanding of the behavior of acoustic markers in the healthy population, to provide normative data gathered from a large number of healthy speakers, in order to then be able to build hypotheses on specific pathological populations.

Online survey

Given the variety of available speech measures in adults, the objective of this online survey was to describe the current practices of French-speaking clinicians regarding speech assessment, as well as the shortcomings they report in existing tools.

Materials and methods: Data were collected using an online questionnaire for French-speaking speech and language pathologists in Belgium, France, Switzerland and Luxembourg. Forty-nine questions were grouped into six domains: participant data, educational and occupational background, experience with speech disorders, patient population, tools and tasks for speech assessment, and possible shortcomings in the current assessment of speech intelligibility.

Results: Speech-language pathologists use a variety of tasks and speech samples, building up “à la carte” assessments. Pseudo-words are rarely used and are absent from standardized batteries. The predominant use of words and sentences indicates that the assessment mainly focuses on notions of comprehensibility, and not of intelligibility in the analytical sense of the term. The duration of the tests (48 minutes on average) is relatively long, especially given the fatigability of the patients. The equipment used to record the patients for the speech assessment is not standardized and few speech and language pathologists seem to be comfortable with the different recording parameters. In addition, perceptual evaluation largely prevails, given the scarcity of currently available objective measures. Clinicians note a lack of objectivity and reproducibility in speech assessment measures, which are more qualitative than quantitative in nature, even when using “formal” assessment batteries. Other shortcomings mentioned relate to the exhaustiveness of the evaluation and the consideration of specific speech parameters (prosody, flow and nasality), as well as the practicality of the evaluation tools (duration, cost, accessibility and ease of use).

Conclusion: This study highlights a lack of standardization of the speech assessment in adults. It underlines the need to offer new tools that are objective, reproducible, easy to use and financially accessible, allowing for a simple, accurate, rapid, ecological and relevant assessment in the context of multiple pathologies that can affect speech. The automation of these tools would meet the criteria of reliability and speed.

Speech expert interviews

To supplement the clinicians’ data collected via the online survey, semi-structured interviews with speech experts were also carried out internationally. The main research questions are: How is speech intelligibility defined and assessed? What are the shortcomings of currently existing tools?

Fourteen experts from three domains were interviewed: speech and language pathology researchers (6), phoneticians/linguists/neuropsychologists/audiologists (4) and computer scientists (4).

These experts are located in the USA (3), France (3), Australia (2), England (2), Belgium, the Netherlands, Germany and Sweden. The interviews have been transcribed, then analyzed by two independent raters who identified the main answers to the interviewer’s questions. The data from the different interviews are being summarized in order to highlight the main points reported by the speech experts.

The patients’ point of view

A questionnaire has been set up to gather the opinions of 60 patients (30 with neurological speech disorders and 30 with morphological speech disorders). In this questionnaire, the patients are asked about the speech assessment procedure (how it was conducted, how they felt about it, the shortcomings they perceived and their needs regarding their speech assessment…).

Speech material: reading passages (working group and Delphi project)

Various reading passages exist, none of which, however, fully meets clinicians’ and researchers’ needs for the assessment of speech and voice. Therefore, a working group of ten francophone experts (2 ENTs, 4 linguists, 4 SLPs in Belgium, France and Canada) has been created. The aim of this group is to create a new standard reading passage in French that meets as many as possible of the criteria needed for speech and voice assessments (phonetic balance, prosody, word frequencies and lengths…), in order to allow, inter alia, the application of acoustic measures to more functional material.

The main criteria to control when creating the new reading passage have been prioritized as shown in Fig. 2.

The early stages of this project led to an international Delphi project. The Delphi technique is an extensively used group survey methodology that is conducted over several consecutive rounds and aims to reach a consensus among a panel of individuals with expertise (both professional and experience-based) in the investigated field. This study is quasi-anonymous: the identity of each participant is only known to the main investigator/moderator, and only via the provided email address (to monitor round-to-round response rates); participants remain anonymous to each other, which allows for freedom of expression without any social or professional pressure from peers.

Our Delphi survey is addressed to professionals (clinicians, researchers, lecturers) who are currently engaged in activities in at least one of the following fields:

- speech sound disorders (incl. dysarthria, apraxia/dyspraxia, orofacial structural deficits, head and neck oncology, velar insufficiencies, hearing impairment and articulation disorders)

- fluency disorders (stuttering/stammering)

- voice disorders

The international survey aims to reach agreement, through an international decision-making process, on which criteria should be taken into account when creating a new reading passage for speech/voice assessment. There also appears to be a lack of consensus regarding the terminology of speech-related concepts as well as the assessment methods, which in turn may influence the decisions taken when implementing new speech assessment materials.

The consensus survey is structured as follows:

- Definitions of speech-related concepts

- Perceptual and objective speech measures

- Criteria for creating standard reading passages

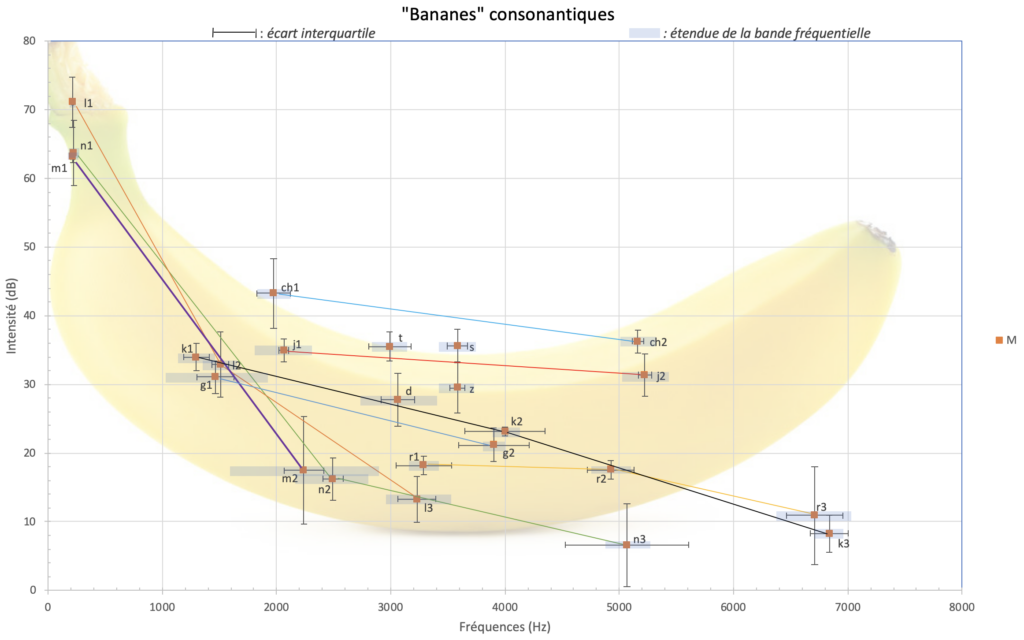

The speech “banana”

The vowel space area, computed by measuring the values of the first two vowel formants, is one of the measures used to assess speech intelligibility. Plotting the vowels on a two-axis graph (F1xF2) allows for the interpretation of the values of the patient’s vowels with reference to the “standard” vowel triangle. However, consonants are also of crucial importance in speech intelligibility, especially at the word level. Stevens and Blumstein already stated in 1978 that the place of articulation of the plosive consonants is identifiable by the global aspect of their spectral representation. In audiometry, the speech “banana” (Fig. 3) is the representation of the “standard” main frequency component of each consonant, as well as its intensity, allowing for the delimitation of a consonant area on an audiogram. This method allows the hearing care professional to estimate the impact of the hearing impairment on the speech perception by the patient, and thereby to adjust the frequency band amplification of the hearing aid accordingly.
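As an illustration of the vowel space area mentioned above, the following minimal sketch computes the area of the vowel triangle in the F1 × F2 plane with the shoelace formula. The function name `polygon_area` and the formant values are hypothetical and chosen only for the example; they are not data from this project.

```python
def polygon_area(points):
    """Shoelace formula: area of a simple polygon given its ordered (x, y) vertices."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


# Hypothetical corner-vowel formants in Hz, stored as (F1, F2); illustrative values only.
corner_vowels = {
    "i": (300.0, 2300.0),
    "a": (750.0, 1300.0),
    "u": (300.0, 800.0),
}

# Area of the [i]-[a]-[u] triangle, in Hz squared.
vsa = polygon_area([corner_vowels[v] for v in ("i", "a", "u")])
print(f"Vowel space area: {vsa:.0f} Hz^2")
```

The same function accepts more than three vertices, so a quadrilateral vowel space could be handled by passing four corner vowels in order around the polygon.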

This approach has been implemented for the consonants of English and Thai. For French, Béchet et al. have computed consonant areas on the plosive sounds [b, d, g], which represent the three most common articulation points on a universal scale, using F2 and F3. Our goal is to build the speech banana representing the 16 French consonants.

In a pilot study, 15 healthy subjects (9 females: median age 47, IQR 18, range 23-76; 6 males: median age 41, IQR 17, range 24-76) produced the 16 consonants in a carrier sentence, in a øCø vowel context. Drawing on the work of Klangpornkun (2013), we used linear predictive coding (LPC) to identify prominent spectral peaks for each consonant, according to the gender of the speaker. By positioning the consonants on a two-axis graph (frequency x intensity), we then generated the “banana” of the French consonants (see Fig. 4 for an example of the male speech “banana”).

It should be noted that, in view of the inter-subject variability of acoustic measures, the number of subjects in this preliminary study is limited. New recordings are being carried out in order to obtain speech samples from at least two men and two women in each of the age groups 20-29, 30-39, 40-49, 50-59, 60-69 and 70+. Also, as this method does not seem applicable to all phonemes (e.g. fricatives are characterized by frequency bands rather than peaks), additional cues have to be taken into account (e.g. intensity/duration of the burst in plosives, spectral moments, frequency bands…). Moreover, it has to be determined how to analyze the acoustic measures (e.g. do we focus on one main peak, or do we take several peaks into account? What would the absence of a single clear peak signify?).

Numerous questions thus remain open and will be addressed during this research project. By automating the construction of such a representation of the consonants produced by a subject, we hope to eventually provide a tool for the objective assessment of speech intelligibility.
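To make the idea of locating a prominent spectral peak with LPC more concrete, here is a minimal, standard-library sketch. It is not the implementation used in the pilot study: it uses the autocorrelation method with the Levinson-Durbin recursion for brevity, the prediction order of 10 and the 10 Hz frequency grid are illustrative assumptions, and all function names are hypothetical. The returned level is relative (the LPC gain term is omitted), so it only ranks peaks within one frame.

```python
import cmath
import math
import random


def autocorrelation(x, max_lag):
    """Biased autocorrelation sums r[0..max_lag] of a sequence."""
    n = len(x)
    return [sum(x[i] * x[i + lag] for i in range(n - lag)) for lag in range(max_lag + 1)]


def levinson_durbin(r, order):
    """LPC polynomial [1, a1, ..., ap] from autocorrelation values r[0..p]."""
    a = [1.0] + [0.0] * order
    error = r[0]
    for i in range(1, order + 1):
        acc = r[i] + sum(a[j] * r[i - j] for j in range(1, i))
        k = -acc / error  # reflection coefficient
        new_a = a[:]
        for j in range(1, i):
            new_a[j] = a[j] + k * a[i - j]
        new_a[i] = k
        a = new_a
        error *= 1.0 - k * k
    return a


def lpc_main_peak(frame, sample_rate, order=10, step_hz=10.0):
    """Frequency (Hz) and relative level (dB) of the highest LPC envelope peak."""
    n = len(frame)
    # Hamming window to reduce edge effects before estimating the autocorrelation.
    windowed = [s * (0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)))
                for i, s in enumerate(frame)]
    a = levinson_durbin(autocorrelation(windowed, order), order)
    best_freq, best_db = 0.0, -math.inf
    for step in range(int((sample_rate / 2) / step_hz) + 1):
        freq = step * step_hz
        w = 2 * math.pi * freq / sample_rate
        # The envelope is 1 / |A(e^{jw})|, evaluated directly on a frequency grid.
        denom = sum(a[k] * cmath.exp(-1j * w * k) for k in range(order + 1))
        level_db = -20.0 * math.log10(abs(denom) + 1e-12)
        if level_db > best_db:
            best_freq, best_db = freq, level_db
    return best_freq, best_db


# Synthetic check: a 4 kHz tone in light noise, 25 ms at 16 kHz (illustrative only).
rng = random.Random(0)
fs = 16000
frame = [math.sin(2 * math.pi * 4000 * n / fs) + 0.05 * rng.gauss(0.0, 1.0)
         for n in range(400)]
peak_hz, peak_db = lpc_main_peak(frame, fs)
print(f"Main LPC peak near {peak_hz:.0f} Hz")
```

Reporting only the single highest peak is one possible answer to the open question of whether to focus on one main peak or several; returning all local maxima of the envelope would be a straightforward variation.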

Spectral moments

Following the preliminary study on the speech “banana”, spectral moments emerged as a promising lead for characterizing consonants. These measures are used in fricatives and in plosives, are linked to articulatory features, and might allow for a visual output. The consonant spectrum is considered as a statistical distribution, which can be described by four measures:

- “Centre of gravity” (CoG): the energy-weighted mean frequency of the spectrum, i.e. the point around which the spectral energy is balanced

  → the CoG is negatively correlated with the length of the anterior resonance cavity (the further forward the constriction, the higher the CoG, e.g., higher in [s] than in [ʃ])

- “Standard deviation” (SD): the dispersion of the spectral energy around the CoG

  → the SD differentiates a diffuse and flat spectrum ([f]) from compact and peaked spectra ([s] and [ʃ])

- “Skewness” (SKEW): the asymmetry of the energy distribution with respect to the mean

  → positive values indicate a distribution that is skewed to the right, where the right tail extends further than the left one

  → skewness is linked to the articulation point; e.g., the energy concentration is below the mean for [ʃ] vs. [s]

- “Kurtosis” (KURT): a measure of the peakedness of the distribution, linked to lingual posture
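The four measures listed above can be sketched as follows, treating a power spectrum as a discrete probability distribution over frequency. This is a minimal sketch under stated assumptions: the centre of gravity is computed as the power-weighted mean frequency (the first moment), power weighting is used throughout, and kurtosis is reported as excess kurtosis (a normal distribution gives 0), which is one common convention. The function name `spectral_moments` and the toy spectrum are hypothetical.

```python
import math


def spectral_moments(freqs_hz, power):
    """Return (CoG, SD, skewness, excess kurtosis) of a power spectrum.

    freqs_hz: bin frequencies in Hz; power: non-negative power per bin.
    """
    total = sum(power)
    weights = [p / total for p in power]  # normalise so the weights sum to 1

    # First moment: power-weighted mean frequency (centre of gravity).
    cog = sum(f * w for f, w in zip(freqs_hz, weights))

    # Central moments of order 2, 3 and 4 around the centre of gravity.
    m2 = sum(w * (f - cog) ** 2 for f, w in zip(freqs_hz, weights))
    m3 = sum(w * (f - cog) ** 3 for f, w in zip(freqs_hz, weights))
    m4 = sum(w * (f - cog) ** 4 for f, w in zip(freqs_hz, weights))

    sd = math.sqrt(m2)
    skew = m3 / sd ** 3          # > 0: longer tail towards high frequencies
    kurt = m4 / m2 ** 2 - 3.0    # excess kurtosis; > 0: more peaked than normal
    return cog, sd, skew, kurt


# Toy spectrum with most of its energy at low frequencies (illustrative values only).
freqs = [1000.0, 2000.0, 3000.0, 4000.0, 5000.0]
power = [8.0, 4.0, 2.0, 1.0, 1.0]
cog, sd, skew, kurt = spectral_moments(freqs, power)
print(f"CoG={cog:.0f} Hz, SD={sd:.0f} Hz, skewness={skew:.2f}, kurtosis={kurt:.2f}")
```

In this toy spectrum the energy is concentrated below the mean with a tail towards higher frequencies, so the skewness comes out positive, matching the interpretation given in the list.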

Thirty-seven healthy speakers were recorded reading aloud a list of 12 carrier sentences, with the structure “Le sac [øCø] convient”, where C stands for one of the 12 French non-sonorants: [b, d, g, p, t, k, v, z, ʒ, f, s, ʃ]. The sample is composed of 18 female and 19 male speakers, with a median age of 29 (IQR: 24-47, min: 19, max: 79).

The four spectral moments (CoG, SD, SKEW, KURT) were computed for each extracted consonant, at three timepoints: at 15% (beginning), at 50% (mid-time) and at 85% (end) of the consonant. The Hamming window length was set to 40 ms for fricatives, and 20 ms for plosives. A pre-emphasis filter of 6 dB/octave above 100 Hz was applied, as the spectral moment measures are more accurate the closer the spectral distribution is to a normal curve. Furthermore, a 1000 Hz Hann-shaped high-pass filter with a smoothing of 100 Hz was used to reduce the possible lower frequency voicing effects. LPC spectra were also computed using Burg’s algorithm with a prediction order of 10, a 25 ms analysis window and a time step of 5 ms.
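The timepoint and windowing scheme described above can be sketched as follows. This is an illustration under stated assumptions rather than the project's actual pipeline: each Hamming window is assumed to be centred on its timepoint, samples falling outside the recording are zero-padded, and pre-emphasis is approximated by a common first-order time-domain filter whose coefficient is derived from the 100 Hz corner frequency. All names are hypothetical.

```python
import math

WINDOW_S = {"fricative": 0.040, "plosive": 0.020}  # window lengths from the text
TIMEPOINTS = (0.15, 0.50, 0.85)                     # relative positions in the consonant


def pre_emphasize(samples, sample_rate, from_hz=100.0):
    """First-order pre-emphasis: y[n] = x[n] - alpha * x[n-1] (about +6 dB/octave)."""
    alpha = math.exp(-2.0 * math.pi * from_hz / sample_rate)
    out = list(samples[:1])  # the first sample has no predecessor and is kept as-is
    for n in range(1, len(samples)):
        out.append(samples[n] - alpha * samples[n - 1])
    return out


def hamming(n):
    """Hamming window of n points."""
    if n < 2:
        return [1.0] * n
    return [0.54 - 0.46 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def frames_at_timepoints(samples, sample_rate, start_s, end_s, manner):
    """Windowed frames centred at 15%, 50% and 85% of the consonant interval."""
    n_win = int(round(WINDOW_S[manner] * sample_rate))
    window = hamming(n_win)
    frames = {}
    for rel in TIMEPOINTS:
        centre = int(round((start_s + rel * (end_s - start_s)) * sample_rate))
        first = centre - n_win // 2
        frame = []
        for i in range(n_win):
            idx = first + i
            # Zero-pad if the window reaches beyond the recording (assumption).
            value = samples[idx] if 0 <= idx < len(samples) else 0.0
            frame.append(value * window[i])
        frames[rel] = frame
    return frames


# Usage on a dummy one-second signal at 16 kHz, consonant from 0.40 s to 0.60 s.
signal = pre_emphasize([1.0] * 16000, 16000)
frames = frames_at_timepoints(signal, 16000, 0.40, 0.60, "fricative")
print({rel: len(frame) for rel, frame in frames.items()})
```

Note that a 40 ms window centred at 15% or 85% of a short consonant will overlap the neighbouring vowels, which is consistent with midpoint measures being the least affected by coarticulation.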

No intra-phoneme gender differences were found. Values computed on recordings made in a soundproof booth did not significantly differ from those computed on recordings carried out in a quiet office. This highlights the clinical usability of the spectral moment measures, as clinicians do not usually have access to a soundproof booth. Midpoint measures allowed for the most distinctions between phonemes, yielded the values most in line with data from the literature, and also minimized the risk of coarticulatory “contamination”.

Fig. 5 summarizes which spectral moment(s) taken at the consonant midpoint differ between the places of articulation in voiced and voiceless fricatives and plosives, respectively. No spectral moment differentiates voiced/voiceless phoneme pairs.

Overall, CoG and SD seem to be the most clear-cut measures. CoG is significantly lower in palatal plosives, and higher in alveolar fricatives. SD, on the other hand, is significantly lower in both labiodental fricatives and bilabial plosives.

SKEW must be interpreted with caution, especially in fricatives, in light of their particular spectral shapes. KURT shows numerous outliers in fricatives as well as in plosives, which calls its reliability into question when it is taken on its own, and it also seems less sensitive than SD in fricatives. Hence, a combination of both pairs, CoG-SD and SKEW-KURT, appears to be the best recommendation. These findings are relevant for further studies, inter alia for investigating the use of spectral moments in French pathological speech.

The next steps of this project are:

- Compare spectral moment values resulting from manual segmentation vs. forced alignment in healthy talkers

- Compare spectral moment values in a controlled sentence environment vs. in the more functional reading passage « La chèvre de monsieur Seguin »

- Compare values in healthy talkers vs. in patients treated for oropharyngeal cancer

- Apply the measure on the new standardized reading passage created in the working group

- Build a visual tool using a combination of acoustic measures, among which the spectral moments

Projects

This PhD thesis is carried out as part of the Training Network on Automatic Processing of PAthological Speech (TAPAS): https://www.tapas-etn-eu.org/

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under Marie Skłodowska-Curie grant agreement No 766287.

Main publications

Pommée, T, Maryn, Y, Finck, C, Morsomme, D. Validation of the Acoustic Voice Quality Index, Version 03.01, in French. Journal of Voice (in press). https://doi.org/10.1016/j.jvoice.2018.12.008

Pommée, T, Maryn, Y, Finck, C, Morsomme, D. The Acoustic Voice Quality Index, Version 03.01, in French and the Voice Handicap Index. Journal of Voice (in press). https://doi.org/10.1016/j.jvoice.2018.11.017

Pommée, T, Woisard, V, Mauclair, J, Pinquier, J. Pertinence clinique des mesures d’intelligibilité de la parole (poster). In : Ecole d’été en Logopédie-Orthophonie (EDE 2019), Liège Université, 01/07/2019-05/07/2019.

Pommée, T, Mauclair, J, Woisard, V, Farinas, J, Pinquier, J. Génération de la « banane de la parole » en vue d’une évaluation objective de l’intelligibilité (poster). In : Journées de Phonétique Clinique (JPC 2019), Université de Mons, 14/05/2019-16/05/2019, Véronique DELVAUX, Kathy HUET, Myriam PICCALUGA, Bernard HARMEGNES (Eds.), CIPA : Centre international de Phonétique Appliquée, p. 107-108, mai 2019.

Balaguer, M, Pommée, T, Farinas, J, Pinquier, J, Woisard, V, Speyer, R. Effects of oral and oropharyngeal cancer on speech intelligibility using acoustic analysis: Systematic review. Head & Neck (in press). https://doi.org/10.1002/hed.25949

References

Abry, C., [b]-[d]-[g] as a universal triangle as acoustically optimal as [i]-[a]-[u], The 15th International Congress of Phonetic Sciences, Barcelona, 2003, 727-730.

Auzou, P. (1998). Évaluation clinique de la dysarthrie. Orthoédition.

Béchet, M., Ferbach-Hecker, V., Hirsch, F., Sock, R., F2/F3 of voiced plosives in VCV sequences in children with cleft palate: an acoustic study, International Seminar on Speech Production, Montréal, 2011, 65-73.

Dittner, J., Lepage, B., Woisard, V., Kergadallan, M., Boisteux, K., Robart, E., & Welby-Gieusse, M. (2010). Élaboration et validation d’un test quantitatif d’intelligibilité pour les troubles pathologiques de la production de la parole. Revue de Laryngologie Otologie Rhinologie, 131(1), 9–14.

Durieux, N, Etienne, A.-M., Willems, S. (2017). Introduction à l’evidence-based practice en psychologie. Journal des Psychologues, 345, 16-20.

Fant, G., Speech sounds and features, MIT Press, 1973.

Fontan, L. (2012). De la mesure de l’intelligibilité à l’évaluation de la compréhension de la parole pathologique en situation de communication. Université Toulouse 2 Le Mirail.

Ghio, A., Giusti, L., Blanc, E., Pinto, S., Lalain, M., Robert, D., … Woisard, V. Quels tests d’intelligibilité pour évaluer les troubles de production de la parole ? Journées d’Etude sur la Parole, Paris, 2016, 589–596.

Ghio, A., Lalain, M., Giusti, L., Pouchoulin, G., Robert, D., Rebourg, M., … Woisard, V. (2018). Une mesure d’intelligibilité par décodage acoustico-phonétique de pseudo-mots dans le cas de parole atypique. XXXIIe Journées d’Études Sur La Parole, 285–293. https://doi.org/10.21437/jep.2018-33

Gurevich, N., & Scamihorn, S. L. (2017). Speech-language pathologists’ use of intelligibility measures in adults with dysarthria. American Journal of Speech-Language Pathology, 26(3), 873–892. https://doi.org/10.1044/2017_AJSLP-16-0112

Hustad, K. C. (2008). The Relationship Between Listener Comprehension and Intelligibility Scores for Speakers With Dysarthria. Journal of Speech, Language, and Hearing Research, 51(3), 562–573. https://doi.org/10.1044/1092-4388(2008/040)

Jackson, P. J. B., Acoustic cues of voiced and voiceless plosives for determining place of articulation, CRAC workshop, Aalborg, 2001, 19-22.

Jan, M. (2007). L’évaluation instrumentale de la dysarthrie en France. In P. Auzou, V. Rolland-Monnoury, S. Pinto, & C. Ozsancak (Eds.), Les dysarthries (pp. 259–269). Marseille : Solal.

Johnson, K., Ladefoged, P., Lindau, M., Individual differences in vowel production. Journal of the Acoustical Society of America, 1993, Vol 94(2), 701-714. https://doi.org/10.1121/1.406887

Kent, R. D., Weismer, G., Kent, J. F., & Rosenbek, J. C. (1989). Toward Phonetic Intelligibility Testing in Dysarthria. Journal of Speech and Hearing Disorders, 54(4), 482–499. https://doi.org/10.1044/jshd.5404.482

Kent, R. D. (Ed.). (1992). Intelligibility in Speech Disorders. https://doi.org/10.1075/sspcl.1

Klangpornkun, N., Onsuwan, C., Tantibundhit, C., & Pitathawatchai, P. (2013). Predictions from “speech banana” and audiograms: Assessment of hearing deficits in Thai hearing loss patients. The Journal of the Acoustical Society of America, 134(5), 4132–4132. https://doi.org/10.1121/1.4831179

Owren, M. J., & Cardillo, G. C. (2006). The relative roles of vowels and consonants in discriminating talker identity versus word meaning. The Journal of the Acoustical Society of America, 119(3), 1727–1739. https://doi.org/10.1121/1.2161431

Stevens, K. N., & Blumstein, S. E. (1978). Invariant cues for place of articulation in stop consonants. The Journal of the Acoustical Society of America, 64(5), 1358–1368. https://doi.org/10.1121/1.382102

Turner, G. S., Tjaden, K., & Weismer, G. (1995). The influence of speaking rate on vowel space and speech intelligibility for individuals with amyotrophic lateral sclerosis. Journal of Speech and Hearing Research, 38(5), 1001–1013. https://doi.org/10.1044/jshr.3805.1001

Woisard, V., Espesser, R., Ghio, A., & Duez, D. (2013). De l’intelligibilité à la compréhensibilité de la parole, quelles mesures en pratique clinique ? Revue de Laryngologie Otologie Rhinologie, 134, 27–33.

Yorkston, K. M., Strand, E. A., & Kennedy, M. R. T. (1996). Comprehensibility of Dysarthric Speech. American Journal of Speech-Language Pathology, 5(1), 55–66. https://doi.org/10.1044/1058-0360.0501.55