Temporal Relation Matrix

Context

Feature extraction constitutes the first level of audiovisual content analysis. Basic characteristics are available through segmentation results and can be considered as low-level descriptors. Our goal is to reach a higher level and propose new sets of descriptors related to relevant semantic and structural events occurring in the audiovisual content. This challenging issue can be addressed in different ways. We propose a generic, unsupervised method based on the temporal analysis of audiovisual content. Defined in [Ibrahim 2007], this method is:

- Generic, because it is independent of the medium (audio or video), of the content type (broadcast news, sports videos, TV games, movies, …) and of the semantic event type (interviews, game phases, …).

- Unsupervised, because it requires no a priori knowledge about the audiovisual content. Following a data mining approach, we try to make semantic events emerge from the available sets of basic features.

- Based on temporal relations, since time is the common thread among all the available basic data, regardless of their type.

Overview

Parametric representation of a temporal relation

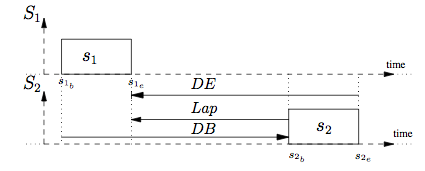

Given a pair of temporal segmentations S1 and S2, provided by two feature extraction processes, we compute three parameters, DE, DB and Lap [Moulin], for each pair of segments (s1, s2) in S1 × S2 that lie within the same temporal window, as shown in figure 1. Any temporal relation is fully described by these three algebraic values.
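The parameter computation can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: the exact definitions of DE, DB and Lap are assumptions here (DE and DB as differences between end and begin times, Lap as the signed distance from the end of s1 to the beginning of s2), and the "same temporal window" condition is modelled by a hypothetical `window` threshold on the distance between the two segments. `Segment`, `relation_parameters` and `pairwise_relations` are illustrative names.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Segment:
    """A segment of a temporal segmentation, with times in seconds."""
    begin: float
    end: float


def relation_parameters(s1, s2):
    """Return the (DE, DB, Lap) triple for the ordered pair (s1, s2).

    Assumed definitions (illustrative, not taken from [Moulin]):
      DE  = s2.end - s1.end      difference between end times
      DB  = s2.begin - s1.begin  difference between begin times
      Lap = s2.begin - s1.end    < 0 means overlap, > 0 means gap
    """
    return (s2.end - s1.end, s2.begin - s1.begin, s2.begin - s1.end)


def pairwise_relations(seg1, seg2, window):
    """Yield (DE, DB, Lap) for each (s1, s2) in seg1 x seg2 whose segments are
    at most `window` seconds apart (hypothetical temporal-window rule)."""
    for s1, s2 in product(seg1, seg2):
        distance = max(0.0, s2.begin - s1.end, s1.begin - s2.end)
        if distance <= window:
            yield relation_parameters(s1, s2)


# Usage: two toy segmentations, e.g. speaker turns (S1) and music zones (S2).
S1 = [Segment(0.0, 4.0), Segment(10.0, 12.0)]
S2 = [Segment(2.0, 6.0), Segment(30.0, 31.0)]
print(list(pairwise_relations(S1, S2, window=5.0)))
# [(2.0, 2.0, -2.0), (-6.0, -8.0, -10.0)]
```

Pairs farther apart than the window (here, anything involving the segment at 30 s) produce no relation, which keeps the number of relations roughly linear in the number of segments rather than quadratic.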

Figure 1: Temporal relation parameters

Temporal Relation Matrix

Each temporal relation can be considered as:

- a point in the 3-parameter space

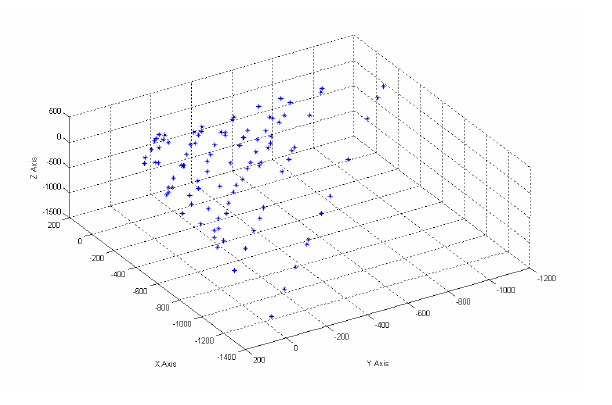

- an element of a three-dimensional matrix in which the number of occurrences of each temporal relation is accumulated.

This vote matrix is called a Temporal Relation Matrix (TRM); a three-dimensional representation of a TRM is shown in figure 2. A TRM is computed for each pair of available temporal segmentations, and the audiovisual content is then represented by this set of TRMs.
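The vote accumulation can be illustrated with a small pure-Python sketch. It assumes that each relation is already given as a (DE, DB, Lap) triple and that each parameter is clipped to a symmetric range and quantised into fixed-width bins; `build_trm`, `bin_width` and `max_abs` are hypothetical names and choices, not the published method.

```python
def build_trm(relations, bin_width, max_abs):
    """Accumulate (DE, DB, Lap) triples into a 3-D vote matrix (a TRM sketch).

    Assumptions: every parameter is clipped to [-max_abs, max_abs] and
    quantised into bins of width `bin_width`; the result is a nested list
    indexed as trm[de_bin][db_bin][lap_bin].
    """
    n_bins = int(2 * max_abs // bin_width) + 1

    def to_bin(value):
        value = max(-max_abs, min(max_abs, value))  # clip outliers to the edge bins
        return int((value + max_abs) // bin_width)

    trm = [[[0] * n_bins for _ in range(n_bins)] for _ in range(n_bins)]
    for de, db, lap in relations:
        trm[to_bin(de)][to_bin(db)][to_bin(lap)] += 1  # one vote per relation
    return trm


# Usage: three relations, two of which fall into the same cell.
relations = [(2.0, 2.0, -2.0), (2.0, 2.0, -2.0), (-6.0, -8.0, -10.0)]
trm = build_trm(relations, bin_width=5.0, max_abs=10.0)
print(trm[2][2][1], trm[0][0][0])  # 2 1
```

In this sketch, one such matrix would be built for every pair of available segmentations (for example by iterating over `itertools.combinations` of the segmentation list). The bin width trades temporal resolution against the sparsity of the votes.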

Figure 2: Example of a three-dimensional representation of a TRM

TRM Analysis

Relevant information cannot be extracted directly from this set of TRMs; their content must first be analyzed. The first step of this analysis is to identify classes of temporal relations, grouping together relations that share similar characteristics. This can be done with a clustering algorithm (K-means, Fuzzy C-means, …) or by defining constraints on the three parameters.
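As an illustration of the constraint-based option, the sketch below assigns each relation to one of a few hypothetical, Allen-like classes by thresholding the parameters. It assumes DE = e2 - e1, DB = b2 - b1 and Lap = b2 - e1 for segments s1 = [b1, e1] and s2 = [b2, e2]; under those definitions e2 - b1 = DE + DB - Lap, so every endpoint comparison can be expressed from the three values. The class names and the tolerance `tol` are assumptions; a clustering algorithm such as K-means could replace `classify_relation` without changing the rest.

```python
from collections import Counter


def classify_relation(de, db, lap, tol=0.5):
    """Assign a (DE, DB, Lap) triple to a hypothetical, Allen-like class.

    Assumed definitions, for s1 = [b1, e1] and s2 = [b2, e2]:
      DE = e2 - e1, DB = b2 - b1, Lap = b2 - e1,
    which gives e2 - b1 = DE + DB - Lap. `tol` (seconds) absorbs small
    boundary jitter between the two segmentations.
    """
    gap_after = lap                 # b2 - e1: > 0 means s2 starts after s1 ends
    gap_before = -(de + db - lap)   # b1 - e2: > 0 means s2 ends before s1 starts
    if gap_after > tol or gap_before > tol:
        return "disjoint"
    if abs(gap_after) <= tol or abs(gap_before) <= tol:
        return "adjacent"
    if abs(db) <= tol and abs(de) <= tol:
        return "equal"
    if db >= -tol and de <= tol:
        return "s2 inside s1"
    if db <= tol and de >= -tol:
        return "s1 inside s2"
    return "partial overlap"


def class_histogram(relations, tol=0.5):
    """Count how many relations fall into each class."""
    return Counter(classify_relation(de, db, lap, tol) for de, db, lap in relations)


# Usage: relations for the pairs (0,4)/(2,6), (10,12)/(2,6) and (0,10)/(2,5).
print(class_histogram([(2.0, 2.0, -2.0), (-6.0, -8.0, -10.0), (-5.0, 2.0, -8.0)]))
```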

Main goals

Once classes of temporal relations have been identified, further analysis can be carried out, for example by extracting relevant patterns that are characteristic of the audiovisual content. These patterns can help not only to refine the semantic description and to represent the structure of the content, but also to cluster audiovisual documents that share common or similar patterns.
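To compare documents, one simple option (an assumption, not the authors' method) is to describe each document by how many of its relations fall into each class and to group documents whose class counts are close in cosine similarity. The greedy `group_documents` function, its `threshold` and the toy document names below are hypothetical; a standard clustering algorithm could be used instead.

```python
import math


def cosine_similarity(h1, h2):
    """Cosine similarity between two class-count histograms (dict-like)."""
    dot = sum(count * h2.get(label, 0) for label, count in h1.items())
    n1 = math.sqrt(sum(v * v for v in h1.values()))
    n2 = math.sqrt(sum(v * v for v in h2.values()))
    return dot / (n1 * n2) if n1 and n2 else 0.0


def group_documents(histograms, threshold=0.9):
    """Greedy grouping: each document joins the first group whose first
    member is at least `threshold` similar to it, otherwise it starts a new group."""
    groups = []
    for name, hist in histograms.items():
        for group in groups:
            if cosine_similarity(histograms[group[0]], hist) >= threshold:
                group.append(name)
                break
        else:
            groups.append([name])
    return groups


# Usage with toy, made-up class counts for three documents.
docs = {
    "news_1": {"adjacent": 8, "disjoint": 2},
    "news_2": {"adjacent": 16, "disjoint": 4},
    "debate": {"partial overlap": 10, "adjacent": 1},
}
print(group_documents(docs))  # [['news_1', 'news_2'], ['debate']]
```

Cosine similarity ignores the overall number of relations, so a long and a short document with the same proportions of relation classes end up in the same group.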

Application

This method is used in the context of the EPAC project, in its structuring and aggregation task. This project focuses on conversational speech processing and aims to provide enriched annotations of large sets of audio data. The TRM analysis and the pattern extraction help to detect and characterize conversational speech zones.

Projects

EPAC Project (ANR 2006 Masse de Données – Connaissances Ambiantes): Mass Audio Documents Exploration for Extraction and Processing of conversational speech

References

Moulin, B. Conceptual-graph approach for the representation of temporal information in discourse. Knowledge-Based Systems, Vol. 5, No. 3, pp. 183-192, September 1992.

Contributors

- Zein Al Abidin Ibrahim

- Isabelle Ferrané (contact)

- Philippe Joly

- Benjamin Bigot

Main Publications

Zein Al Abidin Ibrahim, Isabelle Ferrané, Philippe Joly. Conversation Detection in Audiovisual Documents: Temporal Relation Analysis and Error Handling. In: International Conference on Information Processing and Management of Uncertainty in Knowledge-Based Systems (IPMU 2006), Paris, France, 02/07/2006-07/07/2006, EDK Editions médicales et scientifiques (electronic proceedings), 2006.

Zein Al Abidin Ibrahim, Isabelle Ferrané, Philippe Joly. Audio data analysis using parametric representation of temporal relations. In: IEEE International Conference on Information and Communication Technologies: from Theory to Applications (ICTTA 2006), Damascus, Syria, 24/04/2006-28/04/2006, IEEE (electronic proceedings), April 2006.

Zein Al Abidin Ibrahim, Isabelle Ferrané, Philippe Joly. Temporal Relation Analysis in Audiovisual Documents for Complementary Descriptive Information. In: Third International Workshop on Adaptive Multimedia Retrieval (AMR 2005), Glasgow, UK, 28/07/2005-29/07/2005, M. Detyniecki, J.M. Jose, A. Nürnberger, C.J. van Rijsbergen (Eds.), LNCS, Springer-Verlag GmbH (Computer Science: Vol. 3877/2006, pp. 141-154), ISBN: 3-540-32174-8, July 2005.

Zein Al Abidin Ibrahim, Isabelle Ferrané, Philippe Joly. Temporal Relation Mining between Events in Audiovisual Documents. In: Fourth International Workshop on Content-Based Multimedia Indexing (CBMI 2005), Riga, Latvia, 21/06/2005-23/06/2005, SuviSoft Oy Ltd., ISBN 952-15-1364-0, June 2005.

Zein Al Abidin Ibrahim. Caractérisation des Structures Audiovisuelles par Analyse Statistique des Relations Temporelles [Characterization of Audiovisual Structures by Statistical Analysis of Temporal Relations]. PhD thesis, Université Paul Sabatier, July 2007.