Automated Audio Captioning

Context

In recent years, deep learning systems for text generation, processing and understanding have improved significantly, leading to the use of free-form text as a general-purpose interface between humans and machines. In sound event recognition, most tasks rely on a predefined set of classes, but natural language can convey much more information, which could improve machine understanding of our world.

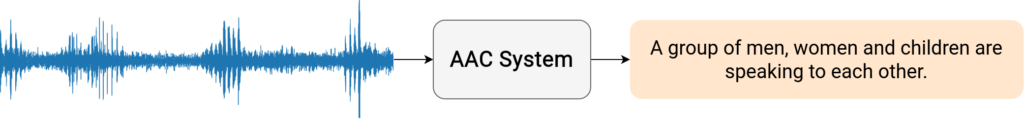

This idea led to the creation of the Automated Audio Captioning (AAC) task, which appeared in 2017 [1]. This task aims to create systems that generate a sentence written in natural language describing a single audio file.

The audio can contain diverse types of sound events (speech, human actions, natural, domestic, urban, music, sound effects, …) of different lengths (1 to 30 s), recorded with different devices and in different acoustic scenes. The description is written by a human and can include any kind of detail about the audio, such as temporal or spatial relations between sound events (“followed by”, “in the background”, …) or different characterizations (“continuously”, “muffled”, “low”, …).

Since the descriptions are written by humans, we need to consider different words used to describe similar sounds (“rain is falling” / “pouring” / “flowing” / “trickling”), different sentence structures (“a door that needs to be oiled” / “a door creaking” / “a door with squeaky hinges”), subjectivity (“man speaks in a foreign language”), high-level descriptions (“a vulgar man speaks”, “unintelligible conversation”, …), or vagueness (“someone speaks” instead of “a man gives a speech over a reverberating microphone”).

Public datasets

In order to train AAC systems, the research community has created several datasets where each audio file is described by humans. Here we present the two most commonly used for English captioning.

Clotho [2]: A small dataset of 6,972 audio recordings, each described by 5 different captions. The audio files are downloaded from the Freesound website [3] and contain various sound events (except music). Audio files last 15 to 30 seconds, and captions are 8 to 20 words long. The training subset contains 3,840 files for a total audio duration of 24.0 hours, with 19,195 captions and 217,362 words.

AudioCaps [4]: The largest public dataset, with 51,308 audio files from the AudioSet dataset [5], which contains audio clips from YouTube [6] videos. The audio files are resampled to 32 kHz and most of them last ten seconds. In the training subset, the audio files total 136.6 hours. Unlike Clotho, each file is described by a single caption in the training subset and by five captions in the validation and test subsets. The training subset contains a total of 402,482 words, with caption lengths ranging from 2 to 52 words.

Metrics

The metrics used to evaluate our models come from machine translation and image captioning tasks. We call the sentence produced by our model for an audio file the “candidate”, while the ground-truth sentences are called the “references”.

The three machine translation metrics are BLEU [7], METEOR [8] and ROUGE-L [9]. The BLEU and METEOR metrics are based on overlapping n-grams between the candidate sentence and the reference ones. ROUGE-L is based on the longest common subsequence between the candidate and the references.
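To make the two ideas concrete, here is a minimal sketch of a BLEU-style clipped n-gram precision and of a simplified ROUGE-L score built on the longest common subsequence. It is an illustration only: the function names are our own, the full BLEU metric additionally combines several n-gram orders and applies a brevity penalty, and the official ROUGE-L uses a weighted F-score and handles multiple references.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return the list of n-grams (as tuples) found in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, references, n):
    """BLEU-style modified n-gram precision of one candidate against its references."""
    cand_counts = Counter(ngrams(candidate, n))
    if not cand_counts:
        return 0.0
    # For each n-gram, keep the maximum count seen in any single reference.
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    # A candidate n-gram is only credited as often as it appears in a reference.
    matched = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
    return matched / sum(cand_counts.values())


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """Simplified ROUGE-L: harmonic mean of LCS-based precision and recall."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    precision = lcs / len(candidate)
    recall = lcs / len(reference)
    return 2 * precision * recall / (precision + recall)


candidate = "a door is creaking".split()
references = ["a door creaking".split(), "a door with squeaky hinges".split()]
unigram_precision = clipped_precision(candidate, references, 1)
bigram_precision = clipped_precision(candidate, references, 2)
rouge_l = rouge_l_f1(candidate, references[0])
```

In this toy case, three of the four candidate words appear in a reference, but only one of its three bigrams does, which shows how higher-order n-grams penalize differences in word order and phrasing.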

The captioning metrics are CIDEr-D [10], SPICE [11] and SPIDEr [12]. The CIDEr-D metric is the cosine similarity between the TF-IDF scores of the n-grams, which takes into account how frequent each n-gram is when evaluating the sentence. SPICE is based on the F-score computed on the edges of a semantic graph built from propositions extracted by a parser and handcrafted grammar rules. This allows the metric to recognize topics in the candidate and to match them with those of the references. Finally, the SPIDEr metric is the average of CIDEr-D and SPICE and is mainly used to rank AAC systems.
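The sketch below illustrates the principle behind CIDEr-D and the SPIDEr average on a toy example. It is deliberately reduced: it only uses unigrams and a three-caption corpus that we made up, whereas the actual CIDEr-D averages over several n-gram orders and adds clipping, a length penalty and a scaling factor. The helper names and the score values passed to `spider` are hypothetical.

```python
import math
from collections import Counter


def tfidf_vector(tokens, doc_freq, num_docs):
    """Map each word of a sentence to its TF-IDF weight."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {
        # Rare words (low document frequency) receive a larger weight.
        word: (count / total) * math.log(num_docs / max(1, doc_freq.get(word, 0)))
        for word, count in counts.items()
    }


def cosine_similarity(u, v):
    """Cosine similarity between two sparse vectors stored as dictionaries."""
    dot = sum(weight * v.get(word, 0.0) for word, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def spider(cider_d, spice):
    """SPIDEr is the arithmetic mean of CIDEr-D and SPICE."""
    return (cider_d + spice) / 2.0


# Toy reference corpus: one caption per (hypothetical) audio file.
corpus = [
    "rain is falling".split(),
    "a door is creaking".split(),
    "a man speaks".split(),
]
doc_freq = Counter(word for caption in corpus for word in set(caption))

candidate_vec = tfidf_vector("rain is pouring".split(), doc_freq, len(corpus))
reference_vec = tfidf_vector(corpus[0], doc_freq, len(corpus))
similarity = cosine_similarity(candidate_vec, reference_vec)

# Hypothetical CIDEr-D and SPICE values, only to show the averaging step.
example_spider = spider(0.4, 0.1)
```

The word “is” occurs in two of the three toy captions, so it contributes less to the similarity than the rarer word “rain”.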

Participation in DCASE

Since 2020, the annual DCASE challenge [13] has included a task dedicated to audio captioning to motivate research in this area. Most proposed models are derived from audio tagging, automatic speech recognition or text generation architectures. They adopt an encoder-decoder structure, where the encoder takes the audio file as input and produces an audio representation. The decoder then takes this representation and the previous words as input in order to predict the next word.
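The word-by-word generation loop can be sketched as follows. This is not one of the submitted systems: `toy_encoder` and `toy_decoder` are hypothetical stand-ins for real neural networks, with a hand-written probability table, and the loop simply picks the most probable word at each step (greedy decoding).

```python
BOS, EOS = "<bos>", "<eos>"


def toy_encoder(audio_samples):
    """Stand-in for an audio encoder: summarise the waveform as its mean amplitude."""
    return sum(abs(s) for s in audio_samples) / max(1, len(audio_samples))


def toy_decoder(audio_repr, previous_words):
    """Stand-in for a decoder: next-word probabilities given the audio and the previous words."""
    loud = audio_repr > 0.5
    table = {
        BOS: {"a": 0.9, "rain": 0.1},
        "a": {"door": 0.7, "man": 0.3} if loud else {"man": 0.8, "door": 0.2},
        "door": {"creaks": 0.6, EOS: 0.4},
        "man": {"speaks": 0.9, EOS: 0.1},
    }
    # Any word without an entry ends the sentence.
    return table.get(previous_words[-1], {EOS: 1.0})


def greedy_caption(audio_samples, max_length=10):
    """Encode the audio once, then predict one word at a time until <eos>."""
    audio_repr = toy_encoder(audio_samples)
    words = [BOS]
    while len(words) <= max_length:
        probs = toy_decoder(audio_repr, words)
        next_word = max(probs, key=probs.get)  # most probable next word
        if next_word == EOS:
            break
        words.append(next_word)
    return " ".join(words[1:])


loud_caption = greedy_caption([0.9, -0.8, 0.7])
quiet_caption = greedy_caption([0.1, -0.1])
```

The encoder runs once per audio file, while the decoder is called once per generated word, each time conditioned on everything produced so far.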

2020

The first IRIT approach, for DCASE2020 [14], was based on the Listen-Attend-Spell model, originally designed for ASR. This model is composed of a pyramidal bi-LSTM network as encoder and an attention-based LSTM decoder that produces the caption. This system reached a SPIDEr score of 0.124, whereas the challenge baseline achieved 0.054.

2021

In our DCASE2021 submission [15], we kept the same recurrent decoder and used a pre-trained convolutional encoder from the Pretrained Audio Neural Networks (PANN) study. This encoder was originally trained to classify sound events on the AudioSet dataset, which makes the detection of audio events easier and drastically improves performance. This system achieved a SPIDEr score of 0.231 on the evaluation subset.

2022

Finally, for DCASE2022 [16], we changed the decoder and studied the performance of our model when using stochastic decoding methods. The decoder is now a standard transformer decoder [17], which helps the model capture relations between the words of a caption through attention mechanisms. Stochastic decoding methods are designed to help the model generate more diverse content. They sample the next word from the output probabilities of the model instead of always selecting the most probable one. However, these approaches tend to produce syntactically and semantically incorrect sentences, which can drastically decrease our scores. This system achieved a SPIDEr score of 0.241 on the evaluation subset.
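As an illustration of what sampling the next word looks like, the sketch below shows two common stochastic decoding ingredients, temperature scaling and top-k filtering. These are generic examples and not necessarily the exact methods studied in [16]; the probability values and function names are made up for the example.

```python
import random


def apply_temperature(probs, temperature):
    """Sharpen (T < 1) or flatten (T > 1) a distribution, then renormalise."""
    scaled = {w: p ** (1.0 / temperature) for w, p in probs.items()}
    total = sum(scaled.values())
    return {w: p / total for w, p in scaled.items()}


def top_k_filter(probs, k):
    """Keep only the k most probable words and renormalise."""
    kept = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {w: p / total for w, p in kept}


def sample_word(probs, rng):
    """Draw one word at random, in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical output probabilities of a decoder for the next word.
next_word_probs = {"rain": 0.5, "water": 0.3, "wind": 0.15, "door": 0.05}

rng = random.Random(0)  # fixed seed so that the run is reproducible
greedy_word = max(next_word_probs, key=next_word_probs.get)
filtered = top_k_filter(apply_temperature(next_word_probs, 0.7), k=2)
samples = [sample_word(filtered, rng) for _ in range(5)]
```

Greedy decoding would always return “rain” here, whereas sampling can also return “water”. Restricting the choice to the top-k words is one way to limit the risk of picking an unlikely word such as “door”, which is the kind of error that leads to incorrect sentences.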

References

[1] K. Drossos, S. Adavanne, and T. Virtanen, Automated Audio Captioning with Recurrent Neural Networks. arXiv, 2017. doi: 10.48550/ARXIV.1706.10006. [Online]. Available: https://arxiv.org/abs/1706.10006.pdf

[2] K. Drossos, S. Lipping, and T. Virtanen, “Clotho: An Audio Captioning Dataset,” arXiv:1910.09387 [cs, eess], Oct. 2019, Accessed: May 31, 2021. [Online]. Available: http://arxiv.org/abs/1910.09387

[3] Freesound website: https://freesound.org/

[4] C. D. Kim, B. Kim, H. Lee, and G. Kim, “AudioCaps: Generating captions for audios in the wild,” in Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers). Minneapolis, Minnesota: Association for Computational Linguistics, Jun. 2019, pp. 119–132. [Online]. Available: https://aclanthology.org/N19-1011

[5] J. F. Gemmeke, D. P. W. Ellis, D. Freedman, A. Jansen, W. Lawrence, R. C. Moore, M. Plakal, and M. Ritter, “Audio Set: An ontology and human-labeled dataset for audio events,” in 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). New Orleans, LA: IEEE, Mar. 2017, pp. 776–780. [Online]. Available: http://ieeexplore.ieee.org/document/7952261/

[6] YouTube website: https://youtube.com/

[7] K. Papineni, S. Roukos, T. Ward, and W.-J. Zhu, “BLEU: a method for automatic evaluation of machine translation,” in Proceedings of the 40th Annual Meeting on Association for Computational Linguistics – ACL ’02, 2002, p. 311. doi: 10.3115/1073083.1073135. [Online]. Available: https://aclanthology.org/P02-1040/

[8] M. Denkowski and A. Lavie, “Meteor Universal: Language Specific Translation Evaluation for Any Target Language,” in Proceedings of the Ninth Workshop on Statistical Machine Translation, 2014, pp. 376–380. doi: 10.3115/v1/W14-3348. [Online]. Available: https://aclanthology.org/W14-3348/

[9] C.-Y. Lin, “ROUGE: A Package for Automatic Evaluation of Summaries,” in Text Summarization Branches Out, Jul. 2004, pp. 74–81. [Online]. Available: https://aclanthology.org/W04-1013/

[10] R. Vedantam, C. L. Zitnick, and D. Parikh, “CIDEr: Consensus-based Image Description Evaluation,” arXiv:1411.5726 [cs], Jun. 2015, arXiv: 1411.5726. [Online]. Available: http://arxiv.org/abs/1411.5726

[11] P. Anderson, B. Fernando, M. Johnson, and S. Gould, “SPICE: Semantic Propositional Image Caption Evaluation,” arXiv:1607.08822 [cs], Jul. 2016, arXiv: 1607.08822. [Online]. Available: http://arxiv.org/abs/1607.08822

[12] S. Liu, Z. Zhu, N. Ye, S. Guadarrama, and K. Murphy, “Improved Image Captioning via Policy Gradient optimization of SPIDEr,” 2017 IEEE International Conference on Computer Vision (ICCV), pp. 873–881, Oct. 2017, arXiv: 1612.00370. [Online]. Available: http://arxiv.org/abs/1612.00370

[13] DCASE website: https://dcase.community/

[14] T. Pellegrini, “IRIT-UPS DCASE 2020 audio captioning system,” DCASE2020 Challenge, techreport, Jun. 2020. [Online]. Available: https://dcase.community/documents/challenge2020/technical_reports/DCASE2020_Pellegrini_131_t6.pdf

[15] E. Labbé and T. Pellegrini, “IRIT-UPS DCASE 2021 Audio Captioning System,” DCASE2021 Challenge, techreport, Jul. 2021. [Online]. Available: https://dcase.community/documents/challenge2021/technical_reports/DCASE2021_Labbe_102_t6.pdf

[16] E. Labbé, T. Pellegrini, and J. Pinquier, “IRIT-UPS DCASE 2022 task6a system: stochastic decoding methods for audio captioning,” DCASE2022 Challenge, techreport, Jul. 2022. [Online]. Available: https://dcase.community/documents/challenge2022/technical_reports/DCASE2022_Labbe_87_t6a.pdf

[17] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin, “Attention is all you need,” CoRR, vol. abs/1706.03762, 2017. [Online]. Available: https://arxiv.org/abs/1706.03762