Segmentation in singer turns

Context

As part of the DIADEMS project on indexing ethno-musicological audio recordings, automatic segmentation in singer turns appeared to be essential. In this study, we present the problem of segmentation in singer turns of musical recordings and our experiments in this direction. To detect singer turns, we use MFCC features and explore a method based on the Bayesian Information Criterion (BIC), both of which are widely used in audio segmentation. The value of the BIC penalty coefficient that gives the best performance was found to vary from one recording to another. To avoid choosing a single value for all documents, we propose to combine several segmentations obtained with different values of this parameter, taking a posteriori decisions on which segment boundaries to keep. This yields a gain of 7.1% in F-measure compared with a standard coefficient value.

Overview

The Bayesian Information Criterion

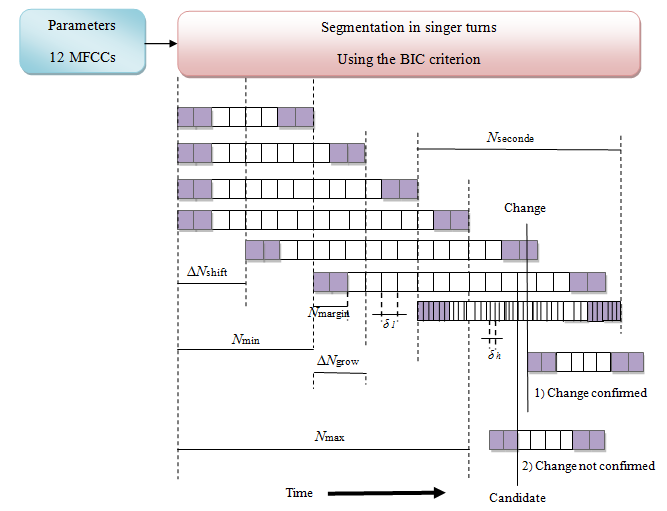

The BIC implementation involves two important parameters: the size N of the signal window in which a segment boundary is searched for, and a penalty factor λ. As a first step, we sought to determine the values of these two parameters on a subset of our corpus. A first version of the algorithm was based on the work of El-Khoury et al., in which the window size was constant [1]. However, no single value was satisfactory for all our recordings. We then implemented a version of the algorithm in which the size of the analysis window increases as long as no potential boundary is found. This method is based on studies in speech segmentation [2] and is illustrated in Figure 1.
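The ΔBIC statistic itself only appears in the figure above. As a minimal sketch, assuming the usual formulation in which each hypothesis is modelled by one full-covariance Gaussian over the MFCC frames, ΔBIC for a candidate boundary at frame t of a window of N frames can be computed as follows. A positive value favours a boundary at t, and λ scales the model-complexity penalty. The function names `log_det_cov` and `delta_bic` are hypothetical, and the exact variant used in this work may differ.

```python
import numpy as np


def log_det_cov(x: np.ndarray) -> float:
    """Log-determinant of the maximum-likelihood covariance of the rows of x."""
    cov = np.atleast_2d(np.cov(x, rowvar=False, bias=True))
    _, logdet = np.linalg.slogdet(cov)
    return float(logdet)


def delta_bic(x: np.ndarray, t: int, lam: float) -> float:
    """Delta-BIC for a change at frame t of a window x of shape (N, d).

    Compares one Gaussian over the whole window with one Gaussian on each
    side of t (assumed full-covariance model). Both sides need more than d
    frames for the covariances to be non-singular. A positive value
    favours a boundary at t.
    """
    n, d = x.shape
    # Log-likelihood gain of the two-Gaussian model over the single Gaussian
    gain = 0.5 * (n * log_det_cov(x)
                  - t * log_det_cov(x[:t])
                  - (n - t) * log_det_cov(x[t:]))
    # Free parameters of one full-covariance Gaussian: d + d(d+1)/2
    penalty = 0.5 * (d + 0.5 * d * (d + 1)) * np.log(n)
    return float(gain - lam * penalty)
```

Because the penalty term grows with λ, a larger λ makes a boundary harder to accept, which is why its value has such a strong influence on the final segmentation.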

Figure 1: Illustration of the singer turns algorithm adapted from [3]

The algorithm has two stages, involving two different temporal resolutions:

- The initial length of the analysis window is set to Nmin. If no segment change is detected within this window, its size is increased by ΔNgrow, up to a maximum size Nmax. ΔBIC is not evaluated at every frame: candidate boundary positions t are sampled at regular intervals, once every δl observations (low resolution). If no boundary has been detected when Nmax is reached, the analysis window is shifted by ΔNshift observations and this step is repeated.

- If a potential boundary is detected, a window of length Nsecond is centered on it and ΔBIC values are recomputed within this window at high resolution, once every δh observations, to refine the position of the boundary.
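The two stages can be sketched as follows, with the same full-covariance ΔBIC repeated so the block is self-contained. All names (`SearchParams`, `best_candidate`, `next_boundary`, `segment`) and all default values are hypothetical, since the page does not report Nmin, Nmax, ΔNgrow, ΔNshift, Nsecond or the two sampling steps. Two further assumptions: a window that has reached Nmax keeps that size when it is shifted, and after a boundary is found the search restarts from it with a window of Nmin frames.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

import numpy as np


def delta_bic(x: np.ndarray, t: int, lam: float) -> float:
    """Full-covariance Gaussian Delta-BIC for a change at frame t of x (N x d)."""
    def logdet(y: np.ndarray) -> float:
        return np.linalg.slogdet(np.atleast_2d(np.cov(y, rowvar=False, bias=True)))[1]
    n, d = x.shape
    penalty = 0.5 * (d + 0.5 * d * (d + 1)) * np.log(n)
    return 0.5 * (n * logdet(x) - t * logdet(x[:t]) - (n - t) * logdet(x[t:])) - lam * penalty


@dataclass
class SearchParams:
    # Hypothetical values in frames; the page does not report the real ones.
    n_min: int = 100     # Nmin, initial window length
    n_max: int = 500     # Nmax, maximum window length
    dn_grow: int = 50    # ΔNgrow, growth step
    dn_shift: int = 50   # ΔNshift, shift once Nmax is reached
    delta_l: int = 10    # low-resolution sampling step of t (first stage)
    n_second: int = 100  # Nsecond, refinement window length
    delta_h: int = 1     # δh, high-resolution sampling step of t
    margin: int = 20     # minimum frames on each side of t (must exceed d)


def best_candidate(x, lo, hi, step, lam, margin) -> Tuple[float, Optional[int]]:
    """Max Delta-BIC over t sampled every `step` frames inside x[lo:hi]."""
    window = x[lo:hi]
    ts = range(margin, len(window) - margin, step)
    if not ts:
        return -np.inf, None
    score, t = max((delta_bic(window, t, lam), t) for t in ts)
    return score, lo + t


def next_boundary(x: np.ndarray, start: int, lam: float, p: SearchParams) -> Optional[int]:
    """First boundary after `start`, or None if the end of x is reached."""
    pos, n = start, p.n_min
    while True:
        hi = min(pos + n, len(x))
        # Stage 1: low-resolution search in the current analysis window.
        score, b = best_candidate(x, pos, hi, p.delta_l, lam, p.margin)
        if score > 0:
            # Stage 2: re-scan Nsecond frames around b at high resolution.
            lo = max(start, b - p.n_second // 2)
            _, refined = best_candidate(x, lo, lo + p.n_second, p.delta_h, lam, p.margin)
            return refined if refined is not None else b
        if hi == len(x):
            return None                      # end of recording, no further change
        if n < p.n_max:
            n = min(n + p.dn_grow, p.n_max)  # grow the window
        else:
            pos += p.dn_shift                # shift a window of Nmax frames


def segment(x: np.ndarray, lam: float, p: Optional[SearchParams] = None) -> List[int]:
    """One forward pass: boundary frame indices for penalty weight lam."""
    p = p or SearchParams()
    boundaries: List[int] = []
    start = 0
    while (b := next_boundary(x, start, lam, p)) is not None:
        boundaries.append(b)
        start = b
    return boundaries
```

Sampling t coarsely keeps the first stage cheap; only the Nsecond frames around a detected change are scanned at the fine step δh.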

Segmentation is performed bidirectionally: the algorithm is run twice on each recording, a forward pass followed by a backward pass that acts as a verification pass. Running the backward pass usually increases the F-measure.
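The page does not say how the backward pass verifies the forward boundaries. The sketch below assumes a hypothetical rule that keeps a forward boundary only when the backward pass finds a boundary within `tol` frames of it. `segment_fn` stands for any single-pass segmenter returning boundary frame indices; the point the sketch makes concrete is that a boundary at index b of the time-reversed signal maps back to index n - b of the original.

```python
from typing import Callable, List

import numpy as np

# Any single-pass segmenter returning boundary frame indices (hypothetical).
Segmenter = Callable[[np.ndarray], List[int]]


def bidirectional(x: np.ndarray, segment_fn: Segmenter, tol: int) -> List[int]:
    """Forward pass, then a backward pass on the time-reversed frames.

    Assumed verification rule: a forward boundary is kept only if the
    backward pass found a boundary within `tol` frames of it.
    """
    n = len(x)
    forward = segment_fn(x)
    # A boundary at index b of x[::-1] separates x[n-b:] from x[:n-b].
    backward = [n - b for b in segment_fn(x[::-1])]
    return [b for b in forward if any(abs(b - c) <= tol for c in backward)]
```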

Relevance of the penalty coefficient λ

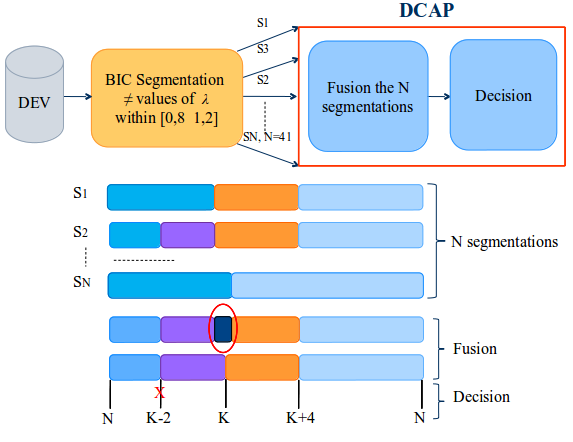

Tuning the penalty factor proved difficult because of its sensitivity to changes in recording conditions and content. To remedy this variability, we had to relax this constraint by systematically considering several values of λ and comparing the resulting segmentations. To this end, we proposed the DCAP method.

Consolidated Decision A Posteriori (DCAP)

To avoid this variability and the a priori choice of the penalty coefficient, we first obtain several segmentations by varying λ over the interval [0.8, 1.2] with a step of 0.01, which gives 41 segmentations. Second, a vote is carried out on the candidate boundaries: a boundary is validated if it was found by at least S0 of these segmentations, with a tolerance of 0.5 s when matching boundaries across segmentations. We call this the Consolidated Decision A Posteriori (DCAP) strategy; it is illustrated in Figure 2. S0 was tuned on a development (DEV) set, giving S0 = 15. Overall, DCAP reaches an F-measure of 61% on the EVAL set.
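The DCAP vote can be sketched as below. The page specifies the λ grid, S0 = 15 and the 0.5 s tolerance, but not how candidates from different segmentations are matched. This sketch assumes a greedy grouping: all candidates lying within 0.5 s of the earliest ungrouped candidate form one group, each segmentation votes at most once per group, and a validated boundary is placed at the median of its group. `lambda_grid`, `dcap_vote`, `dcap` and the `segment_fn` callable (returning boundary times in seconds) are hypothetical names.

```python
from statistics import median
from typing import Callable, List, Sequence


def lambda_grid(lo: float = 0.8, hi: float = 1.2, step: float = 0.01) -> List[float]:
    """Penalty values lo, lo+step, ..., hi (41 values for the defaults)."""
    count = int(round((hi - lo) / step)) + 1
    return [round(lo + i * step, 6) for i in range(count)]


def dcap_vote(segmentations: Sequence[Sequence[float]], s0: int,
              tol: float = 0.5) -> List[float]:
    """Keep boundaries (seconds) supported by at least s0 segmentations."""
    # Pool every candidate as (time, index of the segmentation it came from).
    pooled = sorted((t, k) for k, seg in enumerate(segmentations) for t in seg)
    kept: List[float] = []
    i = 0
    while i < len(pooled):
        # Assumed greedy grouping: candidates within tol of the earliest one.
        j = i
        while j < len(pooled) and pooled[j][0] - pooled[i][0] <= tol:
            j += 1
        group = pooled[i:j]
        voters = {k for _, k in group}  # one vote per segmentation
        if len(voters) >= s0:
            kept.append(median(t for t, _ in group))
        i = j
    return kept


# Hypothetical single-pass segmenter: (features, lam) -> boundary times in seconds.
SegmentFn = Callable[[object, float], List[float]]


def dcap(features, segment_fn: SegmentFn, s0: int = 15, tol: float = 0.5) -> List[float]:
    """Run one segmentation per lambda in [0.8, 1.2] and vote on the boundaries."""
    segmentations = [segment_fn(features, lam) for lam in lambda_grid()]
    return dcap_vote(segmentations, s0, tol)
```

With 41 values of λ, S0 = 15 means a boundary must be found by roughly a third of the segmentations (15/41 ≈ 37%).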

Figure 2: Consolidated Decision A Posteriori strategy

Contributors

Régine André-Obrecht (contact)

Projects

Main Publications

M. Thlithi, T. Pellegrini, J. Pinquier, R. André-Obrecht, P. Guyot. Application du critère BIC pour la segmentation en tours de chant [Application of the BIC criterion to segmentation in singer turns]. In: JEP’2014, Journées d’Études sur la Parole.

M. Thlithi, T. Pellegrini, J. Pinquier, R. André-Obrecht. Segmentation in singer turns with the Bayesian Information Criterion. In: Conference of the International Speech Communication Association (INTERSPEECH’2014), Singapore, September 2014.

References

[1] El-Khoury, E., Sénac, C., and Pinquier, J. (2009). Improved speaker diarization system for meetings. In Proc. International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Taipei, pp. 4097-4100.

[2] André-Obrecht, R. (1988). A new statistical approach for the automatic segmentation of continuous speech signals. IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 36, no. 1, pp. 29-40.

[3] Cettolo, M., Vescovi, M., and Rizzi, R. (2005). Evaluation of BIC-based algorithms for audio segmentation. Computer Speech and Language, pp. 147-170.